Cache Miss – What It Is and How to Reduce It

While caching is one of the most vital mechanisms for improving site performance, frequent cache misses will increase data access time, resulting in a poor user experience and high bounce rates.

This article will help you better understand what a cache miss is, how cache misses work, and how to reduce them. Also, we’ll cover the different types of cache misses.

What Is a Cache Miss?

A cache miss occurs when a system seeks data in the cache, but it’s absent, necessitating retrieval from another source. This differs from a cache hit, where the sought-after data is found in the cache.

When an application needs to access data, it first checks its cache memory to see if the data is already stored there. If the data is not found in cache memory, the application must retrieve the data from a slower, secondary storage location, which results in a cache miss.

Cache misses can slow down computer performance, as the system must wait for the slower data retrieval process to complete.

Cache Miss Penalties and Cache Hit Ratio

Caching enables computer systems, including websites, web apps, and mobile apps, to store file copies in a temporary location, called a cache.

A cache sits between the central processing unit (CPU) and the main memory. Main memory is typically dynamic random access memory (DRAM), whereas a CPU cache is built from static random access memory (SRAM).

SRAM has a lower access time, which is what makes a cache effective at improving performance. The same principle applies on the web. For example, a website cache will automatically store the static version of the site content the first time users visit a web page.

Hence, every time they revisit the website, their devices don’t need to redownload the site content. The cache will take over the query and process the request.

Generally, there are three cache mapping techniques to choose from:

- Direct-mapped cache. It’s the simplest technique, as it maps each memory block into a particular cache line.

- Fully-associative cache. This technique lets any block of the main memory go to any cache line available at the moment.

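- Set-associative cache. It combines fully-associative cache and direct-mapped cache techniques. Set-associative caches arrange cache lines into sets: each memory block maps to one set but can occupy any line within it, which typically results in more hits than a direct-mapped cache.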
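As a rough sketch of how the three techniques differ, the snippet below computes which cache lines a given memory block is allowed to occupy. The cache size (8 lines) and the 2-way associativity are assumptions chosen purely for illustration.

```python
NUM_LINES = 8  # assumed cache size, in lines
WAYS = 2       # assumed associativity for the set-associative case


def direct_mapped_line(block):
    """Direct-mapped: each block maps to exactly one line."""
    return block % NUM_LINES


def set_associative_lines(block):
    """Set-associative: each block maps to one set, any line inside it."""
    num_sets = NUM_LINES // WAYS
    first_line = (block % num_sets) * WAYS
    return list(range(first_line, first_line + WAYS))


def fully_associative_lines(block):
    """Fully-associative: any block may use any line."""
    return list(range(NUM_LINES))


print(direct_mapped_line(10))     # prints 2
print(set_associative_lines(10))  # prints [4, 5]
```

In this sketch, blocks 2 and 10 compete for the same single line in the direct-mapped case, while the set-associative layout gives block 10 two candidate lines.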

To help ensure successful caching, website owners can track their miss penalties and hit ratio.

Cache Miss Penalties

A cache miss penalty refers to the delay caused by a cache miss. It indicates the extra time a system spends fetching data from a lower cache level or the main memory after failing to find it in the cache.

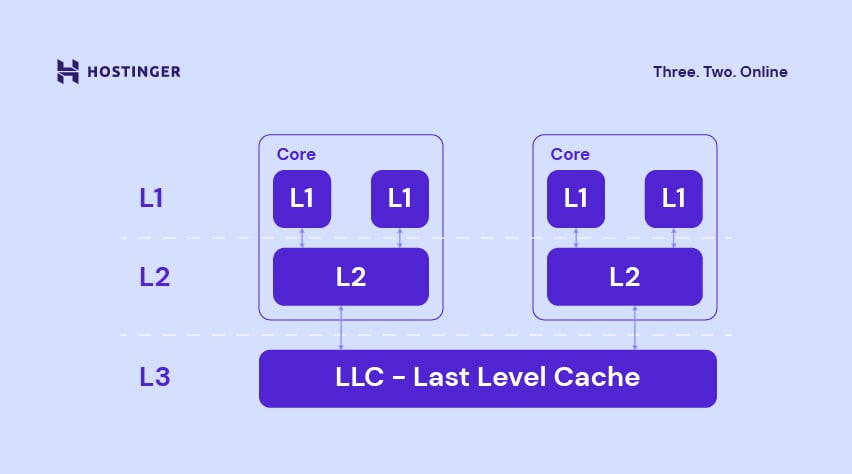

Typically, a CPU cache hierarchy consists of three levels, which affect how fast data can be successfully retrieved. Here’s the common hierarchy:

- L1 cache. Despite being the smallest in terms of capacity, the primary cache is the easiest to access. L1 accommodates recently-accessed content and has designated memory units in each core of the CPU.

- L2 cache. The secondary cache is more extensive than L1 but smaller than L3. It takes longer to access than L1 but is faster than L3. An L2 cache has one designated memory in each core of the CPU.

- L3 cache. Often called Last-Level Cache (LLC), L3 is the largest and slowest cache memory unit. All cores in a CPU share one L3.

When a cache miss occurs, the caching system needs to search further into the CPU memory units to find the stored information. In other words, if a cache can’t find the requested data in the L1 cache, it will then search for it in L2.

The more cache levels a system needs to check, the more time it takes to complete a request. This results in an increased cache miss penalty, especially if the system needs to go all the way to the main memory to fetch the requested data.
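To make the growing penalty concrete, here is a minimal sketch that adds up the lookup time level by level. The latency figures are assumed values for illustration only, and the model simply checks the levels one after another; real processors differ.

```python
# Assumed access latencies in CPU cycles; real values vary by processor.
LATENCY = {"L1": 4, "L2": 12, "L3": 40, "RAM": 200}
LEVELS = ["L1", "L2", "L3", "RAM"]


def access_cost(found_in):
    """Total cycles spent when the data is finally found at `found_in`."""
    cost = 0
    for level in LEVELS:
        cost += LATENCY[level]  # every level checked adds its latency
        if level == found_in:
            return cost
    raise ValueError(f"unknown level: {found_in}")


for level in LEVELS:
    print(level, access_cost(level))
```

With these assumed numbers, a request served from L1 costs 4 cycles, while one that misses every cache level and reaches RAM costs 256.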

Let’s look at four types of cache misses:

- Compulsory miss. Also called a cold start or first reference cache miss, a compulsory miss occurs the first time a piece of data is requested, for example, right after site owners activate the caching system. These misses are inevitable as the caches don’t have any data in their blocks yet.

- Capacity miss. This type of cache miss occurs if the cache cannot contain all the required data for executing a program. Common causes include fully-occupied cache blocks and the program working set being larger than the cache size.

- Conflict miss. It’s also known as a collision or interference cache miss. Conflict misses occur when several memory blocks map to the same cache line or set and keep evicting one another, even though other parts of the cache are free. They affect direct-mapped and set-associative caches, while a fully-associative cache of the same size would avoid them.

- Coherence miss. Also called an invalidation miss, this cache miss occurs because of data access to invalid cache lines. It can be a result of memory updates from external processors.
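The difference between compulsory and conflict misses can be sketched with a tiny simulation. The code below replays the same access trace against a direct-mapped cache and a fully-associative cache of the same size. The 4-line cache, the trace, and the use of least-recently-used eviction in the fully-associative case are all assumptions made for the example.

```python
from collections import OrderedDict


def direct_mapped_misses(trace, num_lines):
    """Count misses when each block can live in only one line."""
    lines = [None] * num_lines
    misses = 0
    for block in trace:
        index = block % num_lines
        if lines[index] != block:
            misses += 1
            lines[index] = block  # overwrite whatever was there
    return misses


def fully_associative_misses(trace, num_lines):
    """Count misses when a block can use any line (LRU eviction assumed)."""
    cache = OrderedDict()
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)
        else:
            misses += 1
            if len(cache) >= num_lines:
                cache.popitem(last=False)
            cache[block] = True
    return misses


trace = [0, 4, 0, 4, 0, 4]  # blocks 0 and 4 share line 0 when num_lines is 4
print(direct_mapped_misses(trace, 4))      # prints 6
print(fully_associative_misses(trace, 4))  # prints 2
```

Both caches take the two compulsory misses for the first access to each block. The four extra misses in the direct-mapped run are conflict misses, because three of its four lines stay empty the whole time.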

Cache Hit Ratio

The cache hit ratio shows the percentage of requests found in the cache. Calculate the cache hit ratio by dividing the number of cache hits by the combined numbers of hits and misses, then multiplying it by 100.

Cache hit ratio = Cache hits/(Cache hits + cache misses) x 100

For example, if a website has 107 hits and 16 misses, the site owner will divide 107 by 123, resulting in 0.87. Multiplying the value by 100, the site owner will get an 87% cache hit ratio.
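The same calculation can be written as a small helper. This is a minimal sketch; the function name is made up for this example.

```python
def cache_hit_ratio(hits, misses):
    """Return the cache hit ratio as a percentage."""
    total = hits + misses
    if total == 0:
        raise ValueError("no requests recorded")
    return hits / total * 100


print(round(cache_hit_ratio(107, 16)))  # prints 87
```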

Anything over 95% is an excellent hit ratio. That said, check the cache configuration if it’s under 80%.

While tracking the cache hit ratio is essential for every website, manually calculating the value is not the most practical option. Thanks to caching plugins like LiteSpeed Cache, WP Rocket, WP Super Cache, and W3 Total Cache, website owners can automate the process.

How to Reduce Cache Misses?

Cache hit and miss problems are common in website development.

Cache misses slow a website down because the CPU has to wait while the requested information is retrieved from the slower DRAM.

The drawback of the cache hit ratio is that it doesn’t tell site owners the bandwidth and latency costs to reach the hits. This is because a successful query will count as a hit no matter how long it takes to fetch the information.

For that reason, a high cache hit ratio doesn’t always mean an effective caching strategy. By knowing how to reduce cache misses, website owners can better understand how effectively their caching systems work.

Let’s check out three methods to reduce cache misses.

Option 1. Increase the Cache Lifespan

As mentioned earlier, a cache miss occurs when the requested data cannot be found in the cache. It may happen simply because the data has expired.

A cache lifespan is the time interval from when a cache creates a copy of data until it’s purged. Defaults vary by plugin, with 10 hours being a common one, and site owners can configure the cache expiry time to better fit their needs.

For example, a website scheduled for a monthly update may not benefit from a short expiry time, like daily or weekly. The process is unnecessary as the website doesn’t have new information to display.

It may cause frequent cache misses as the system will purge and redownload the lost files all over again. Therefore, it’s recommended to set the cache expiry date based on the site’s update schedule.

If you update the site a couple of times a week, set a daily cache expiry time. On the other hand, an hourly expiry time will be more suitable if you update the site every few minutes.
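To show how an expired entry turns into a cache miss, here is a minimal sketch of a cache with a fixed lifespan. The class and its methods are hypothetical and are not taken from any caching plugin. Timestamps are passed in explicitly so the behavior is easy to follow.

```python
class TTLCache:
    """Toy cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds):
        self.ttl = ttl_seconds
        self._store = {}  # key -> (value, time the copy was stored)

    def set(self, key, value, now):
        self._store[key] = (value, now)

    def get(self, key, now):
        """Return the cached value, or None on a miss (absent or expired)."""
        entry = self._store.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if now - stored_at >= self.ttl:
            del self._store[key]  # purge the expired copy
            return None
        return value


cache = TTLCache(ttl_seconds=3600)  # one-hour lifespan
cache.set("/pricing", "<html>...</html>", now=0)
print(cache.get("/pricing", now=1800) is not None)  # prints True (hit)
print(cache.get("/pricing", now=3600) is not None)  # prints False (expired)
```

If the page only changes once a month, every expiry in between forces a miss for content that was still valid, which is why a longer lifespan helps in that case.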

With a caching plugin, modifying the cache lifespan only requires a few clicks. However, locating the setting can be difficult as each plugin uses its own name for it.

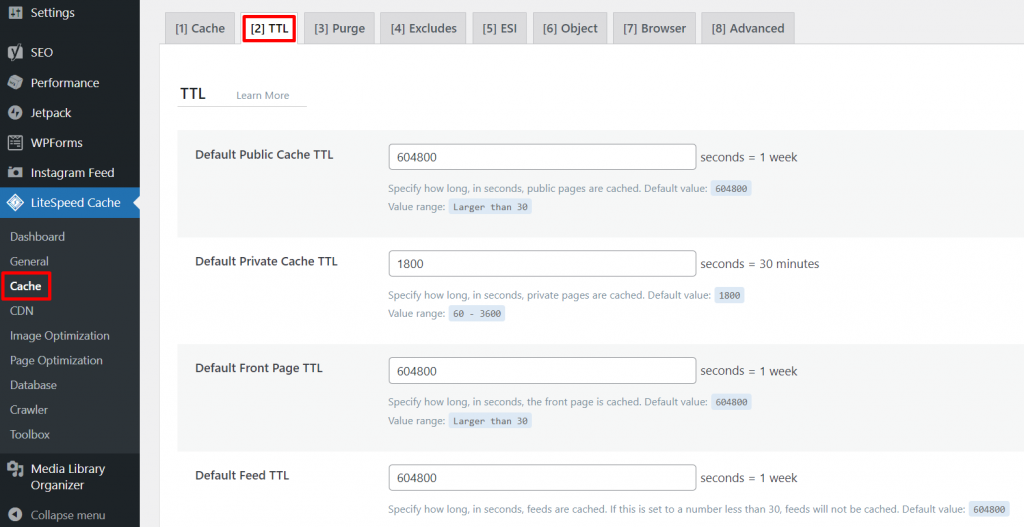

For instance, the cache lifespan setting on LiteSpeed Cache is in the Cache tab under TTL.

Users can configure the cache expiry time for:

- Public pages. They include most of the pages on a website. The possible time-to-live (TTL) values for public pages are one hour (3,600 seconds), one day (86,400 seconds), and two weeks (1,209,600 seconds). As data is rarely updated on these pages, opt for a longer TTL.

- Private pages. This section lets users modify how long until their private pages’ caches expire. Set the values between 60 and 3,600 seconds for the best performance.

- The front page. Configure the cache expiry time for your homepage here. The TTL value needs to be larger than 30 seconds for this page.

- Feeds. They include blog updates and comments. Setting a suitable feed cache TTL helps guarantee up-to-date content for readers. The TTL value for feed caches must be larger than 30 seconds.

- Representational state transfer (REST). This section enables users to set how long to cache REST API calls. REST TTL must be larger than 30 seconds.

- HTTP Status Code Page. This is for setting up TTL for 404, 403, and 500 status codes. The default value is 3,600 seconds, and a short expiry time is suitable for 404 error pages. On the other hand, 403 and 500 errors can use a more extended period.

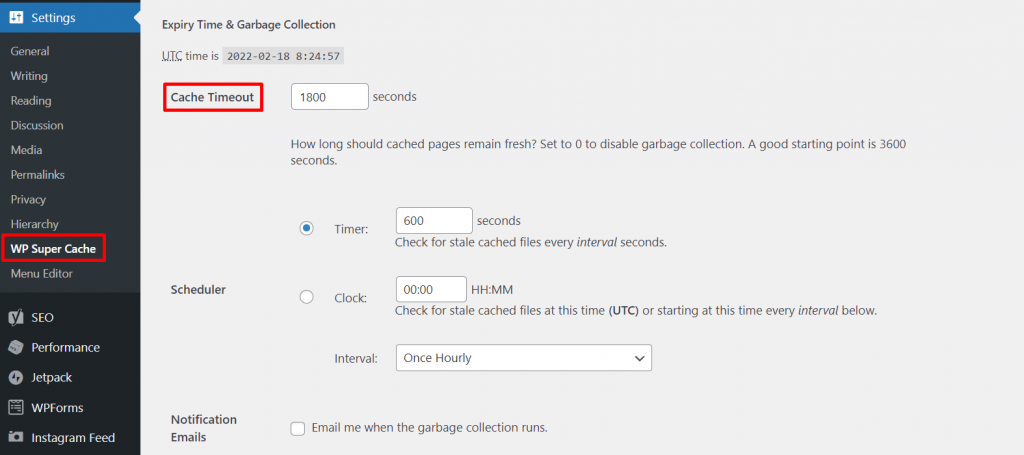

WP Super Cache users will find the cache lifespan settings in Advanced -> Expiry & Garbage Collection -> Cache Timeout.

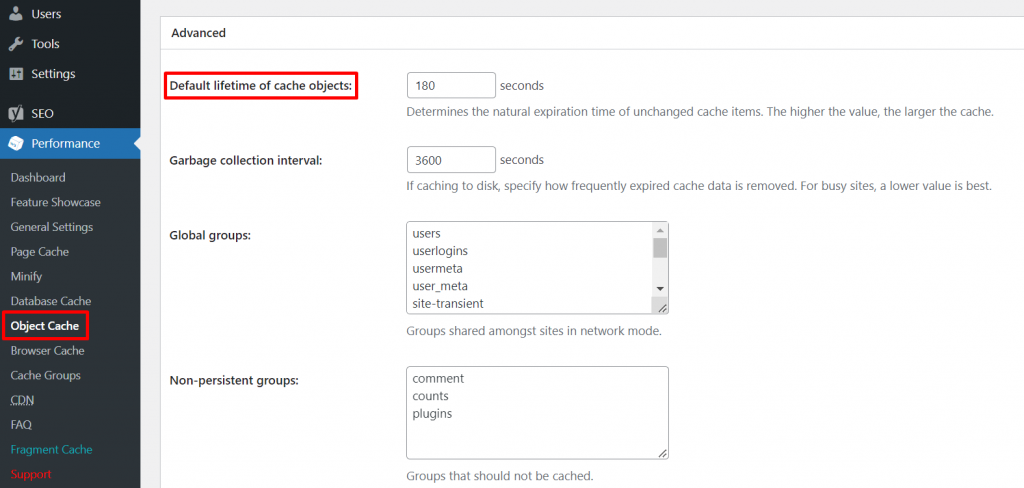

W3 Total Cache users can navigate to Object Cache -> Default lifetime of cache objects.

Pro Tip

The Object Cache (LSMCD) feature greatly reduces the time taken to retrieve query results and improves your website response time by up to 10%.

On WP Rocket, locate the Cache Lifespan section in the Cache tab.

Pro Tip

Keep your cache lifespan within 10-12 hours if your website uses nonces. With WordPress’s default settings, a nonce can stop being valid as early as 12 hours after it’s generated. A lifespan within that window makes the caching system purge old pages and create fresh copies before the nonces embedded in them expire.

Option 2. Optimize Cache Policies

Cache policies, or replacement policies, define the rules for how a cache evicts file copies from its memory. Test out different cache policies to find the perfect one for your caching system.

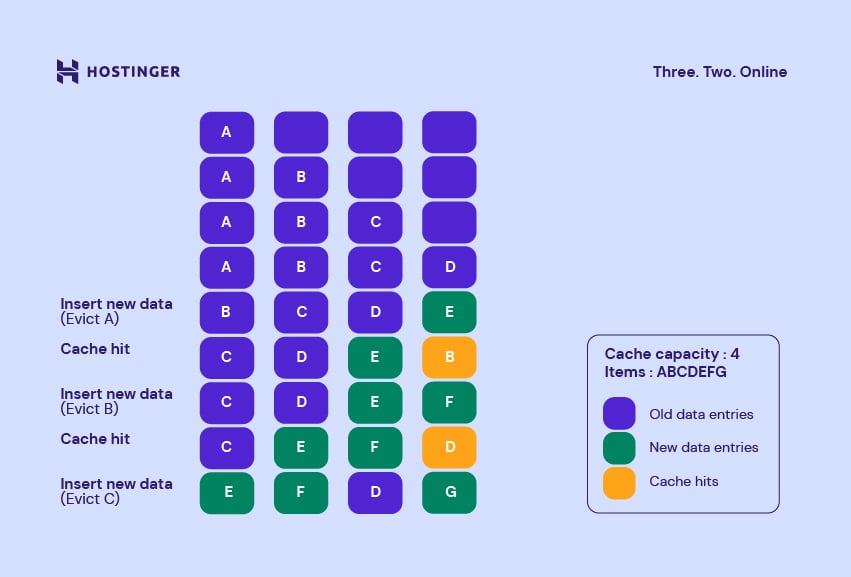

First In, First Out (FIFO)

The FIFO algorithm removes the earliest-added data entries regardless of how many times the cache has accessed them. FIFO behaves like a queue, where data at the head of the list will be the first to be deleted.

In a cache containing items A, B, C, and D, item A is the oldest data entry in the list. If new data is introduced, the FIFO policy will evict A to give room for it.

Here’s an illustration of how it works:

FIFO is best for systems where data access patterns run in progression throughout the entire data set. That’s because this policy keeps only the most recently added entries in the cache, which are the ones such workloads are more likely to access again.

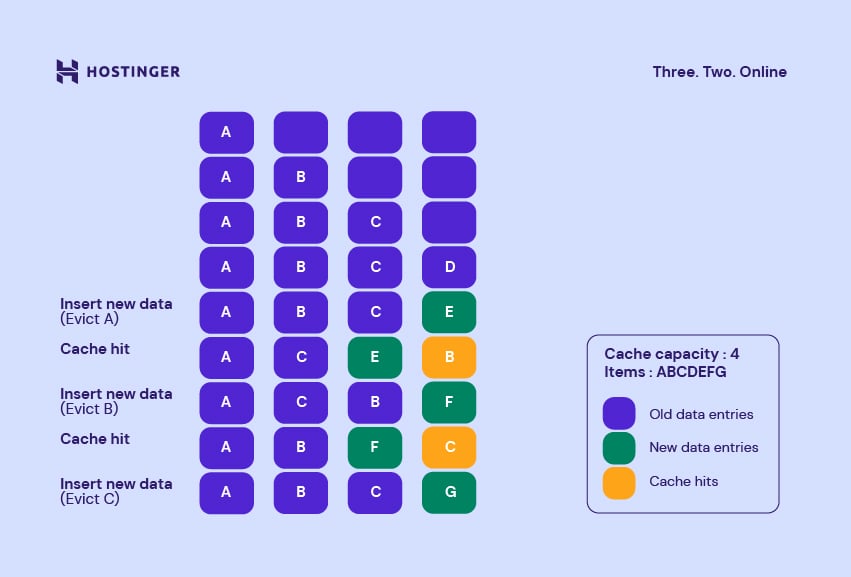
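The A, B, C, D example above can be sketched in a few lines. The class name and its `access` method are made up for this illustration.

```python
from collections import deque


class FIFOCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.items = deque()  # head (left) holds the oldest entry

    def access(self, item):
        """Return True on a hit; on a miss, store the item and return False."""
        if item in self.items:
            return True  # hits do not change the eviction order
        if len(self.items) >= self.capacity:
            self.items.popleft()  # evict the earliest-added entry
        self.items.append(item)
        return False


cache = FIFOCache(4)
for item in "ABCD":
    cache.access(item)
cache.access("A")  # a hit, but A is still the oldest entry
cache.access("E")  # the cache is full, so A is evicted
print(list(cache.items))  # prints ['B', 'C', 'D', 'E']
```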

Last In, First Out (LIFO)

The LIFO cache policy evicts the most recently added data entry from a cache. In other words, LIFO behaves like a stack, where data at the end of the list will be the first to be evicted.

In a cache containing items A, B, C, and D, item D is the newest data entry in the list. Therefore, the LIFO algorithm will remove D to accommodate item E.

Here’s an illustration of it:

With such behavior, LIFO can be a good cache policy for websites that tend to access the same data subset. That’s because LIFO leaves the older entries untouched, so an established set of frequently used data stays available in the cache memory.

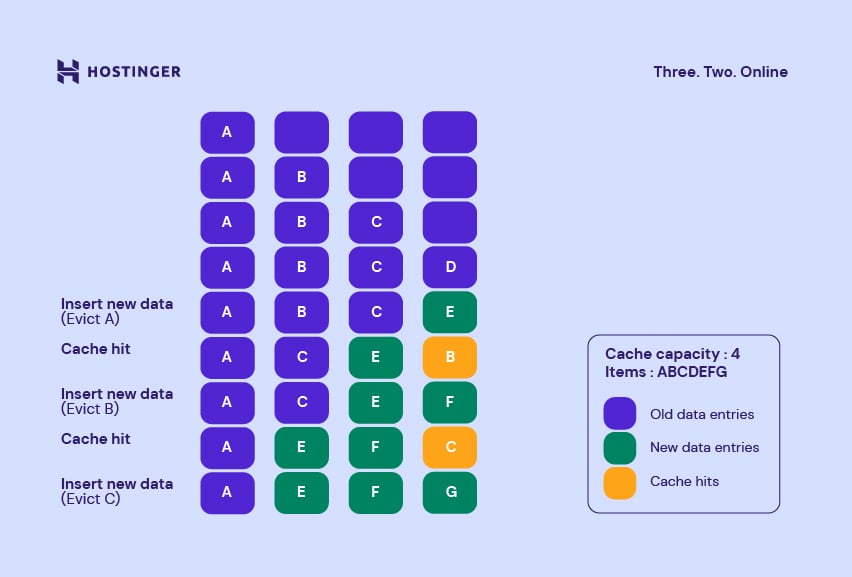
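Here is the same A, B, C, D scenario as a minimal sketch, again with a hypothetical class name.

```python
class LIFOCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.items = []  # the end of the list holds the newest entry

    def access(self, item):
        """Return True on a hit; on a miss, store the item and return False."""
        if item in self.items:
            return True
        if len(self.items) >= self.capacity:
            self.items.pop()  # evict the most recently added entry
        self.items.append(item)
        return False


cache = LIFOCache(4)
for item in "ABCD":
    cache.access(item)
cache.access("E")  # the cache is full, so D is evicted
print(cache.items)  # prints ['A', 'B', 'C', 'E']
```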

Least Recently Used (LRU)

LRU removes the items the cache hasn’t used for the longest time. Don’t confuse LRU with FIFO, as the former considers cache hits before evicting data entries.

Here’s how the cache replacement policy works:

- In a cache containing items A, B, C, D, item A is the least recently used.

- Due to hits for A and C, the sequence may change its order to B, D, A, C.

- Now, item B is the least recently used data entry. In that case, the cache will evict item B to create room for E.

The LRU replacement policy is ideal for websites that tend to reuse recent data in a relatively short period of time. It prevents replacing such data by keeping it far from the head of the list.

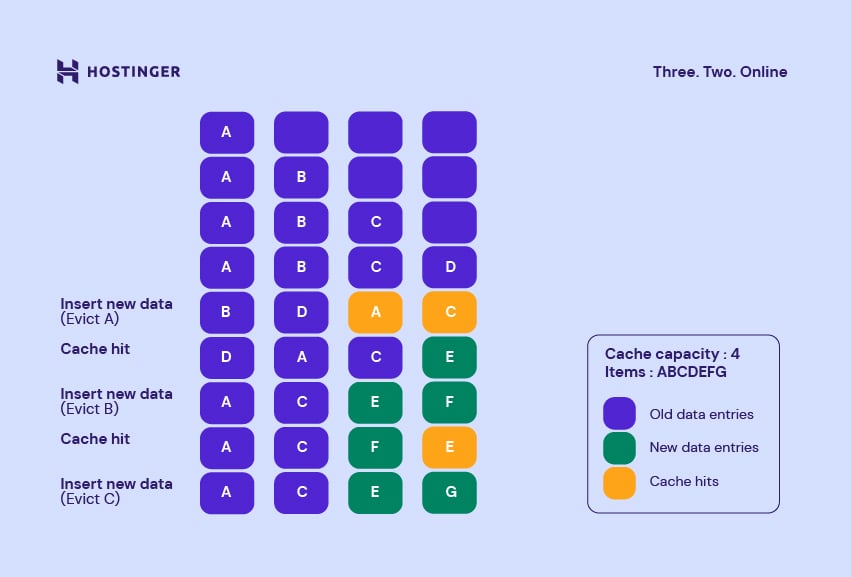
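The steps above can be sketched with Python’s `OrderedDict`, which keeps entries in order and can move one to the end on a hit. The class name is an assumption for this example.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # first key is the least recently used

    def access(self, item):
        """Return True on a hit; on a miss, store the item and return False."""
        if item in self.items:
            self.items.move_to_end(item)  # a hit refreshes the entry
            return True
        if len(self.items) >= self.capacity:
            self.items.popitem(last=False)  # evict the least recently used
        self.items[item] = True
        return False


cache = LRUCache(4)
for item in "ABCD":
    cache.access(item)
cache.access("A")  # hit: order becomes B, C, D, A
cache.access("C")  # hit: order becomes B, D, A, C
cache.access("E")  # miss: B is evicted
print(list(cache.items))  # prints ['D', 'A', 'C', 'E']
```

For caching the results of function calls, Python’s standard library also provides `functools.lru_cache`, which applies the same policy.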

Most Recently Used (MRU)

The MRU algorithm evicts the most recently accessed items to retain older lines in a cache. However, this cache policy can be tricky to use. If a hit occurs on recently used entries, MRU will remove them the next time it needs to make room for new data.

Here’s how this cache replacement policy works:

- In a cache containing A, B, C, D, item D is the most recently used entry. Therefore, MRU will remove D to accommodate E.

- If there’s a request for B, the cache will retrieve it. Consequently, the sequence will turn to A, C, E, B, and item B will be the most recently used item.

- If item F then arrives, the cache will evict B and put F at the tail.


That said, MRU performs well in certain conditions. For instance, MRU helps remove recommendations after customers buy the recommended products or services.
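The MRU steps listed above can be traced with a short sketch. As with the other examples, the class name is hypothetical.

```python
class MRUCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.items = []  # ordered from least to most recently used

    def access(self, item):
        """Return True on a hit; on a miss, store the item and return False."""
        if item in self.items:
            self.items.remove(item)
            self.items.append(item)  # a hit makes the item the most recent
            return True
        if len(self.items) >= self.capacity:
            self.items.pop()  # evict the most recently used entry
        self.items.append(item)
        return False


cache = MRUCache(4)
for item in "ABCD":
    cache.access(item)
cache.access("E")  # miss: D is evicted, giving A, B, C, E
cache.access("B")  # hit: order becomes A, C, E, B
cache.access("F")  # miss: B is evicted
print(cache.items)  # prints ['A', 'C', 'E', 'F']
```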

Option 3. Expand Random Access Memory (RAM)

Another tip to reduce cache misses is to expand the main memory (RAM).

Cache memory holds copies of data from RAM, where the programs and data the CPU is currently using are stored. The larger the RAM capacity, the more data it can accommodate.

Furthermore, larger RAM makes it more likely that the data a cache needs is already in memory and available to store, resulting in fewer cache misses.

The main drawback of increasing RAM is the cost. Typically, it requires upgrading your hosting plan.

Let’s take Hostinger’s cloud web hosting services as an example. Hostinger’s Cloud Startup plan offers 3 GB of RAM, while Cloud Professional offers 6 GB of RAM, and Cloud Enterprise comes with 12 GB of RAM.

At Hostinger, all of our plans are highly scalable. Whether you’re using our Cloud, VPS, or WordPress Hosting services, the process for moving from one plan to another is quick. Therefore, you don’t need to worry about prolonged downtime.

Find out more about the RAM allocation for your hosting plan on our website. Also, our Customer Success team will be glad to help you learn about our hosting plans in more detail.

Conclusion

Caching enables websites and web apps to improve their performance. Set-associative, fully-associative, and direct-mapped cache techniques are three cache mapping approaches that site owners can benefit from.

A cache miss occurs when the requested information cannot be found in the cache. The different types of cache misses include compulsory, conflict, coherence, and capacity cache misses.

Keeping your cache misses low is vital as a high cache miss penalty can harm user experience and increase bounce rate. Let’s do a quick recap on how to reduce cache misses:

- Increase the cache lifespan. This ensures that important data will always be available in the cache memory. Match the lifespan with the update schedule for increased effectiveness.

- Optimize cache policies. They define the rules for how to evict data entries from a cache. Test out different policies to find the best one for your caching system.

- Expand the RAM capacity. As the cache memory feeds on the RAM data, having a larger RAM capacity helps the cache accommodate more files.

We hope those tips on reducing cache misses are helpful. That said, don’t hesitate to leave a comment if you have any questions.
