Robots.txt Disallow: How Does It Block Search Engines

Are you looking for a way to control how search engine bots crawl your site? Or do you want to keep some parts of your website out of search engines? You can do both by adding Disallow rules to your robots.txt file.

In this article, you will learn what robots.txt can do for your site. We’ll also show you how to use it to block search engine crawlers.

What Is Robots.txt

Robots.txt is a plain text file used to communicate with web crawlers. The file is located in the root directory of a site.

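It works by telling search bots which parts of the site they should and shouldn’t crawl. Well-behaved crawlers such as Googlebot and Bingbot check the file before visiting your pages and follow its rules. However, robots.txt is a set of instructions, not an enforcement mechanism, so a bot that chooses to ignore it can still access the site.

In other words, you can configure the file to stop compliant search engines from crawling pages or files on your site. Note that blocking crawling doesn’t guarantee a page stays out of search results: a disallowed URL can still be indexed if other sites link to it.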
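To make the idea concrete, here is a minimal sketch of the check a compliant crawler performs, written with Python’s standard-library `urllib.robotparser`. The rules, the `/private/` folder, the bot name `ExampleBot`, and the helper `crawlable` are all hypothetical, and a real crawler would download the live file instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: every bot is asked to skip /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def crawlable(urls, agent="ExampleBot"):
    """Return the URLs a compliant crawler called `agent` may fetch."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return [url for url in urls if parser.can_fetch(agent, url)]


candidates = [
    "https://www.myname.com/",
    "https://www.myname.com/blog/post.html",
    "https://www.myname.com/private/report.html",
]
print(crawlable(candidates))
# ['https://www.myname.com/', 'https://www.myname.com/blog/post.html']
```

Nothing forces a bot to run this check, which is why robots.txt only controls well-behaved crawlers.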

Why Should I Block a Search Engine

You might want to block crawlers from your entire website, or from pages with information you don’t want to appear in search results. Search engine bots can’t tell on their own which content you consider public and which you consider private, so you need to tell them which areas to skip.

Blocking the entire site is especially useful while your website is in maintenance mode or is a staging copy. Keep in mind, though, that anyone can read your robots.txt file, so it’s not a substitute for password protection on genuinely sensitive pages.

Another use of robots.txt is to reduce duplicate content issues, which occur when the same posts or pages appear on different URLs. Duplicates can negatively impact search engine optimization (SEO).

One way to handle this is to identify the duplicate URLs and disallow bots from crawling them.

How to Use Robots.txt to Disallow Search Engines

To check your site’s robots.txt file, add /robots.txt after your domain, for example, www.myname.com/robots.txt. You can edit the file through your web hosting control panel’s file manager or with an FTP client.

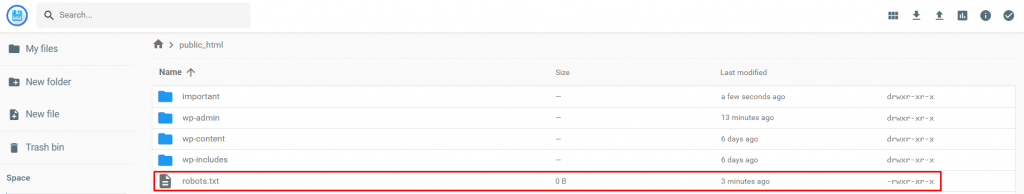
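Crawlers look for the file only at the root of the host, no matter which page they arrive on. As a small illustration, the hypothetical helper `robots_url` below derives that location with Python’s `urllib.parse.urljoin`, using the placeholder domain myname.com.

```python
from urllib.parse import urljoin


def robots_url(page_url):
    """Return the robots.txt location for the host serving `page_url`.

    "/robots.txt" starts with a slash, so urljoin resolves it against
    the host root and discards the page's own path.
    """
    return urljoin(page_url, "/robots.txt")


print(robots_url("https://www.myname.com/blog/2021/post.html"))
# https://www.myname.com/robots.txt
```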

Let’s configure the robots.txt file using the File Manager in Hostinger’s hPanel. First, open the File Manager from the Files section of the panel. Then, open the robots.txt file in the public_html directory.

If the file isn’t there, you can create it manually. Click the New File button in the top right corner of the file manager, name the file robots.txt (all lowercase), and place it in public_html.

Now you can start adding commands to the file. The two main ones you should know are:

- User-agent – specifies which bot the rules that follow apply to, such as Googlebot or Bingbot.

- Disallow – specifies the path (a folder or a page) that the bot should not crawl.
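Each directive has to sit on its own line. Python’s standard-library `urllib.robotparser`, for example, reads everything after `User-agent:` up to the end of the line as the bot name. The sketch below uses that parser as a stand-in for a search engine’s own (real crawlers may differ in edge cases) to compare the two layouts; the helper `is_blocked` and the page `/example-subfolder/page.html` are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt, agent, path):
    """True if the rules in `robots_txt` forbid `agent` from crawling `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, path)


# Each directive on its own line: parsed as one group with one rule.
two_lines = "User-agent: Googlebot\nDisallow: /example-subfolder/\n"
# Both directives on one line: the whole remainder becomes the bot name,
# no Disallow rule is recorded, and nothing is blocked.
one_line = "User-agent: Googlebot Disallow: /example-subfolder/\n"

print(is_blocked(two_lines, "Googlebot", "/example-subfolder/page.html"))  # True
print(is_blocked(one_line, "Googlebot", "/example-subfolder/page.html"))   # False
```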

Let’s look at an example. If you want to prevent Google’s bot from crawling a specific folder on your site, add these lines to the file:

User-agent: Googlebot
Disallow: /example-subfolder/
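You can check how a parser interprets this group. The sketch below uses Python’s `urllib.robotparser`, whose matching is simpler than Google’s but applies the same prefix rule to plain paths; `/about.html` and `/example-subfolder/a/b.html` are made-up paths.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: Googlebot
Disallow: /example-subfolder/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Disallow matches by path prefix, so everything inside the folder is blocked.
print(parser.can_fetch("Googlebot", "/example-subfolder/"))          # False
print(parser.can_fetch("Googlebot", "/example-subfolder/a/b.html"))  # False
# Paths outside the folder remain crawlable.
print(parser.can_fetch("Googlebot", "/about.html"))                  # True
# The group names only Googlebot, so other bots are not restricted.
print(parser.can_fetch("Bingbot", "/example-subfolder/"))            # True
```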

You can also block search bots from crawling a specific web page. To block Bingbot from a single page, set the rules like this:

User-agent: Bingbot
Disallow: /example-subfolder/blocked-page.html
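Because rules match by path prefix, a page rule blocks only URLs that begin with that exact path. The sketch below checks this with Python’s `urllib.robotparser`; the helper `bingbot_allowed` and the page names `other-page.html` and `blocked-page-2.html` are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def bingbot_allowed(rules, path):
    """True if `rules` let Bingbot crawl `path`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch("Bingbot", path)


page_rule = "User-agent: Bingbot\nDisallow: /example-subfolder/blocked-page.html\n"
# Only the named page is blocked; other pages in the folder are not.
print(bingbot_allowed(page_rule, "/example-subfolder/blocked-page.html"))  # False
print(bingbot_allowed(page_rule, "/example-subfolder/other-page.html"))    # True

# Dropping the extension widens the rule: any path with this prefix matches.
loose_rule = "User-agent: Bingbot\nDisallow: /example-subfolder/blocked-page\n"
print(bingbot_allowed(loose_rule, "/example-subfolder/blocked-page-2.html"))  # False
```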

Now, what if you want the robots.txt file to disallow all search engine bots? You can do it by putting an asterisk (*) next to User-agent. And if you want to prevent them from accessing the entire site, just put a slash (/) next to Disallow. Here’s how it looks:

User-agent: *
Disallow: /
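A quick check with Python’s `urllib.robotparser` that this group applies to every bot and every path. The bot name `AnyOtherBot` and the sample URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /"])

# "*" matches every bot name and "/" is a prefix of every path.
for agent in ("Googlebot", "Bingbot", "AnyOtherBot"):
    for url in ("https://www.myname.com/", "https://www.myname.com/blog/post.html"):
        print(agent, url, parser.can_fetch(agent, url))  # always False
```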

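You can set up different configurations for different search engines by adding multiple groups to the file, each starting with its own User-agent line. Changes are live as soon as you save the robots.txt file, but crawlers may cache it, so they can take some time to pick up your edits. Google, for example, generally caches robots.txt for up to 24 hours.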
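When a file has several groups, a bot follows the group that names it and falls back to the `*` group only if none does. A sketch of that behavior with Python’s `urllib.robotparser`, using a hypothetical file that blocks one folder for Googlebot and the whole site for every other bot:

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: Googlebot
Disallow: /example-subfolder/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Googlebot matches its own group, so only the folder is off limits for it.
print(parser.can_fetch("Googlebot", "/"))                              # True
print(parser.can_fetch("Googlebot", "/example-subfolder/page.html"))   # False
# Any other bot falls back to the "*" group and is blocked everywhere.
print(parser.can_fetch("Bingbot", "/"))                                # False
```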

Conclusion

Now you’ve learned how to modify the robots.txt file to manage how search engine bots access your website. With the right Disallow rules in place, compliant search engines will skip the parts of your site you don’t want crawled.

